Fair Lending Analysis

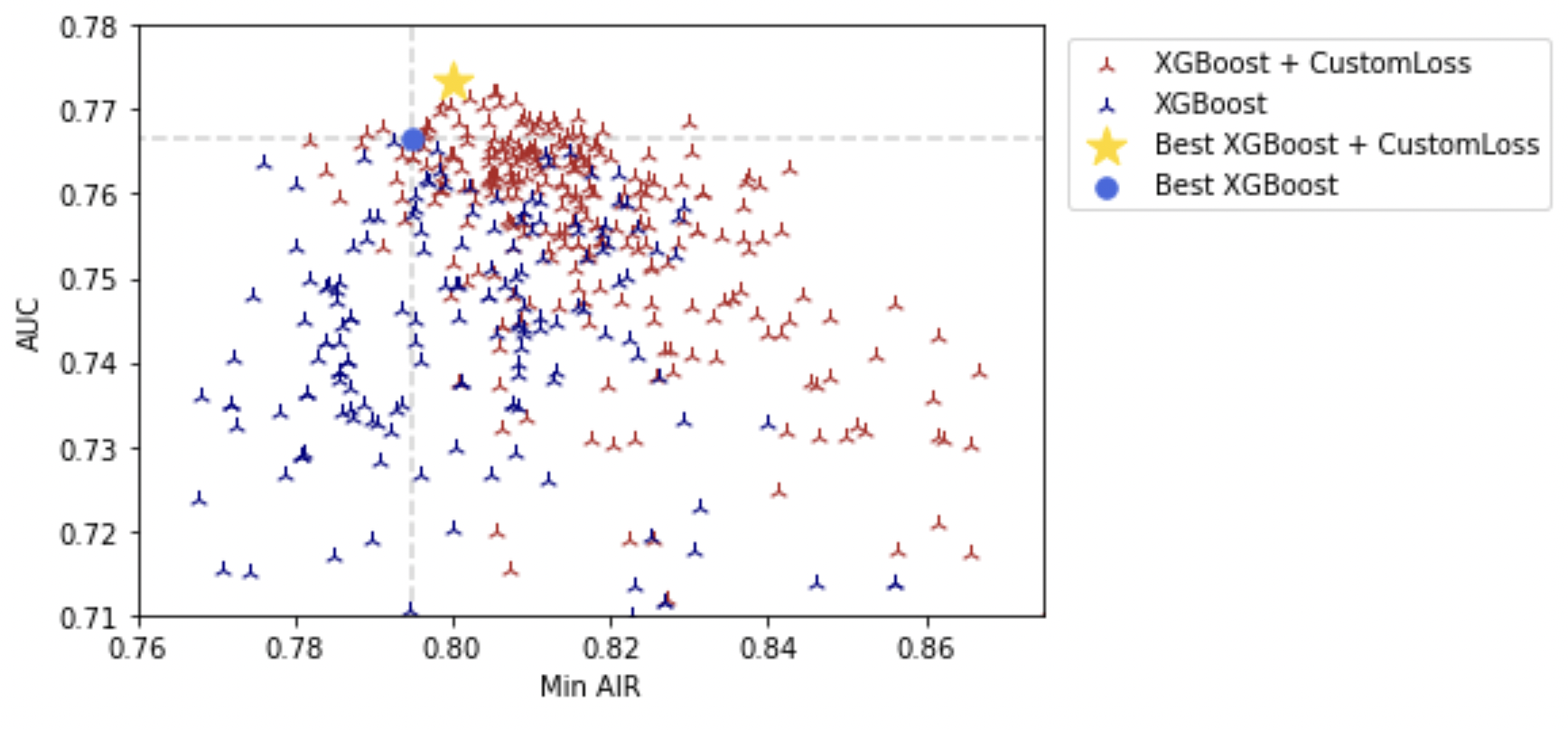

Identify and overcome tradeoffs between performance and disparity.

FairPlay’s automated fairness-as-a-service solutions offer lenders a competitive advantage by enabling quick, reliable, and affordable evaluations of new models, products, data sources, and policy changes for fairness. This allows lenders to swiftly and cost-effectively adapt their credit strategies while maintaining fair lending practices. Furthermore, FairPlay’s fairness optimization technologies help lenders boost profits and promote greater inclusion. Designed to accommodate any scale or complexity, FairPlay’s technologies present a cost-effective alternative to traditional hourly consultancies.

Underwriting models that exclude protected attributes such as race and sex may seem fair on the surface, but they can still inadvertently perpetuate discrimination. Non-protected factors, such as credit history or employment status, can be correlated with protected attributes because of historical and societal biases, so a seemingly neutral model can still produce disparate impacts on protected groups. That is why underwriting models should be monitored and adjusted continuously to ensure fairness and equal opportunity for applicants.
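The sketch below illustrates the point with hypothetical data. The decision rule never sees group membership and uses only one made-up feature (years of credit history), yet approval rates differ because that feature is distributed differently across the two groups in this toy sample. The applicants, the feature, and the cutoff of 5 years are all assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical applicants: (group, years_of_credit_history).
# The group label is used only to measure outcomes, never to decide them.
APPLICANTS = [
    ("control", 9), ("control", 7), ("control", 6), ("control", 3),
    ("protected", 8), ("protected", 4), ("protected", 3), ("protected", 2),
]

def approve(years_of_credit_history: int) -> bool:
    """A 'blind' rule: it sees only a non-protected feature."""
    return years_of_credit_history >= 5

def approval_rates(applicants):
    """Approval rate per group, computed after the fact for monitoring."""
    approved, total = defaultdict(int), defaultdict(int)
    for group, years in applicants:
        total[group] += 1
        approved[group] += approve(years)
    return {group: approved[group] / total[group] for group in total}

rates = approval_rates(APPLICANTS)
print(rates)  # {'control': 0.75, 'protected': 0.25}
```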

The Equal Credit Opportunity Act (ECOA) prohibits the express consideration of protected status when making credit decisions. It does not prohibit, and arguably requires, awareness of protected status during model development when the purpose is to build decisioning systems that are unbiased.

FairPlay’s loss function “teaches” the model it is training about the distributional properties of each protected class. During training, the loss function “punishes” the model for differences in the distribution of outcomes for protected class applicants relative to control class applicants. It treats these disparities as a form of model error that prompts re-learning, just as an inaccurate prediction of default risk would be. This feedback leads the model to “learn” weights for its variables that maximize their ability to predict default risk while also minimizing disparate impacts on protected classes.

Because the model has learned to set these variable weights to be “fair” to protected groups from the outset, the model does not require protected status information as an input at decision time.
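FairPlay’s loss function is proprietary, so the following is only a generic sketch of the idea described above, not FairPlay’s method. It trains a tiny logistic regression whose loss is mean binary cross-entropy plus a penalty `lam * gap**2`, where `gap` is the difference in mean predicted score between protected and control applicants. The penalty form, the data, and all function names (`fair_loss`, `train`, `score_gap`) are hypothetical. Group labels enter only through the loss during training; `predict` takes applicant features alone.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Decision-time score: uses applicant features only, never protected status."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

def score_gap(p, group):
    """Mean score of protected applicants minus mean score of control applicants."""
    prot = [pi for pi, g in zip(p, group) if g]
    ctrl = [pi for pi, g in zip(p, group) if not g]
    return sum(prot) / len(prot) - sum(ctrl) / len(ctrl)

def fair_loss(w, b, X, y, group, lam):
    """Mean binary cross-entropy plus lam * gap**2 (disparity treated as error)."""
    p = [predict(w, b, x) for x in X]
    bce = -sum(yi * math.log(pi) + (1 - yi) * math.log(1 - pi)
               for pi, yi in zip(p, y)) / len(y)
    return bce + lam * score_gap(p, group) ** 2

def train(X, y, group, lam, lr=0.5, steps=2000):
    """Plain gradient descent on fair_loss; group labels are used only here."""
    n, d = len(X), len(X[0])
    n_prot = sum(group)
    n_ctrl = n - n_prot
    w, b = [0.0] * d, 0.0
    for _ in range(steps):
        p = [predict(w, b, x) for x in X]
        gap = score_gap(p, group)
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi, pi, gi in zip(X, y, p, group):
            # Gradient w.r.t. this row's logit: cross-entropy term + penalty term.
            share = 1.0 / n_prot if gi else -1.0 / n_ctrl
            dz = (pi - yi) / n + 2.0 * lam * gap * share * pi * (1.0 - pi)
            grad_w = [gw + dz * xj for gw, xj in zip(grad_w, xi)]
            grad_b += dz
        w = [wj - lr * gw for wj, gw in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

# Hypothetical data: one feature per applicant, repayment label, protected flag.
X = [[2.0], [2.0], [1.0], [0.0], [1.0], [0.0], [0.0], [-1.0]]
y = [1, 1, 1, 0, 1, 1, 0, 0]
group = [False] * 4 + [True] * 4

w_plain, b_plain = train(X, y, group, lam=0.0)
w_fair, b_fair = train(X, y, group, lam=2.0)
gap_plain = score_gap([predict(w_plain, b_plain, x) for x in X], group)
gap_fair = score_gap([predict(w_fair, b_fair, x) for x in X], group)
print(f"score gap without penalty: {gap_plain:.3f}, with penalty: {gap_fair:.3f}")
```

The penalized model ends up with a smaller score gap than the unpenalized one, and scoring a new applicant still only requires `predict(w_fair, b_fair, features)`.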

FairPlay uses artificial intelligence to replicate, explain and optimize your decisions. Our proprietary loss function is used to train models to be more accurate and fair. This results in risk-neutral growth and improved outcomes for historically underserved populations.

Models typically have a single target; for example, an underwriting model is usually trained to predict which consumers will default on a loan. FairPlay models are unique in that they can be given multiple targets, for example: maximize approval rates while minimizing defaults and disparities for protected groups. The end result is a model that achieves all three goals: high approval rates, low risk, and enhanced inclusion.
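FairPlay’s multi-target training is proprietary. A much simpler way to see how several goals can be folded into one objective is a weighted score over candidate approval cutoffs. In this sketch the applicants, scores, weights (`w_approve`, `w_default`, `w_disparity`), and the use of the adverse impact ratio (AIR) as the disparity measure are all hypothetical choices for illustration.

```python
# Hypothetical scored applicants: (score, is_protected, defaulted).
APPLICANTS = [
    (90, False, False), (80, False, False), (70, False, False), (60, False, True),
    (75, True, False), (65, True, False), (55, True, False), (45, True, True),
]

def metrics(cutoff):
    """Approval rate, default rate among approved, and AIR at a score cutoff."""
    approved = [a for a in APPLICANTS if a[0] >= cutoff]
    approval_rate = len(approved) / len(APPLICANTS)
    default_rate = sum(a[2] for a in approved) / len(approved) if approved else 0.0

    def group_rate(is_protected):
        members = [a for a in APPLICANTS if a[1] == is_protected]
        return sum(a[0] >= cutoff for a in members) / len(members)

    ctrl_rate = group_rate(False)
    air = group_rate(True) / ctrl_rate if ctrl_rate else 0.0
    return approval_rate, default_rate, air

def objective(cutoff, w_approve=1.0, w_default=3.0, w_disparity=1.0):
    """Single score combining three targets; the weights are illustrative."""
    approval_rate, default_rate, air = metrics(cutoff)
    return (w_approve * approval_rate
            - w_default * default_rate
            - w_disparity * max(0.0, 1.0 - air))  # penalize AIR below parity

CUTOFFS = [50, 60, 70, 80]
best_without_disparity = max(CUTOFFS, key=lambda c: objective(c, w_disparity=0.0))
best_multi = max(CUTOFFS, key=objective)
print(best_without_disparity, best_multi)  # 70 50
```

With the disparity weight set to zero the search picks a cutoff of 70; with it switched on it picks 50, which approves three protected class applicants instead of one at the cost of one additional default in this toy sample.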

FairPlay’s solutions apply to the full spectrum of consumer and small business credit and liquidity products, including mortgages, auto loans, installment loans, credit cards, cash advances, and lines of credit, as well as earned wage access and Buy Now, Pay Later products.

We use AI fairness techniques to optimize credit models so that they assess the risk of underrepresented subpopulations more accurately. This improves underwriting and pricing for protected class applicants.

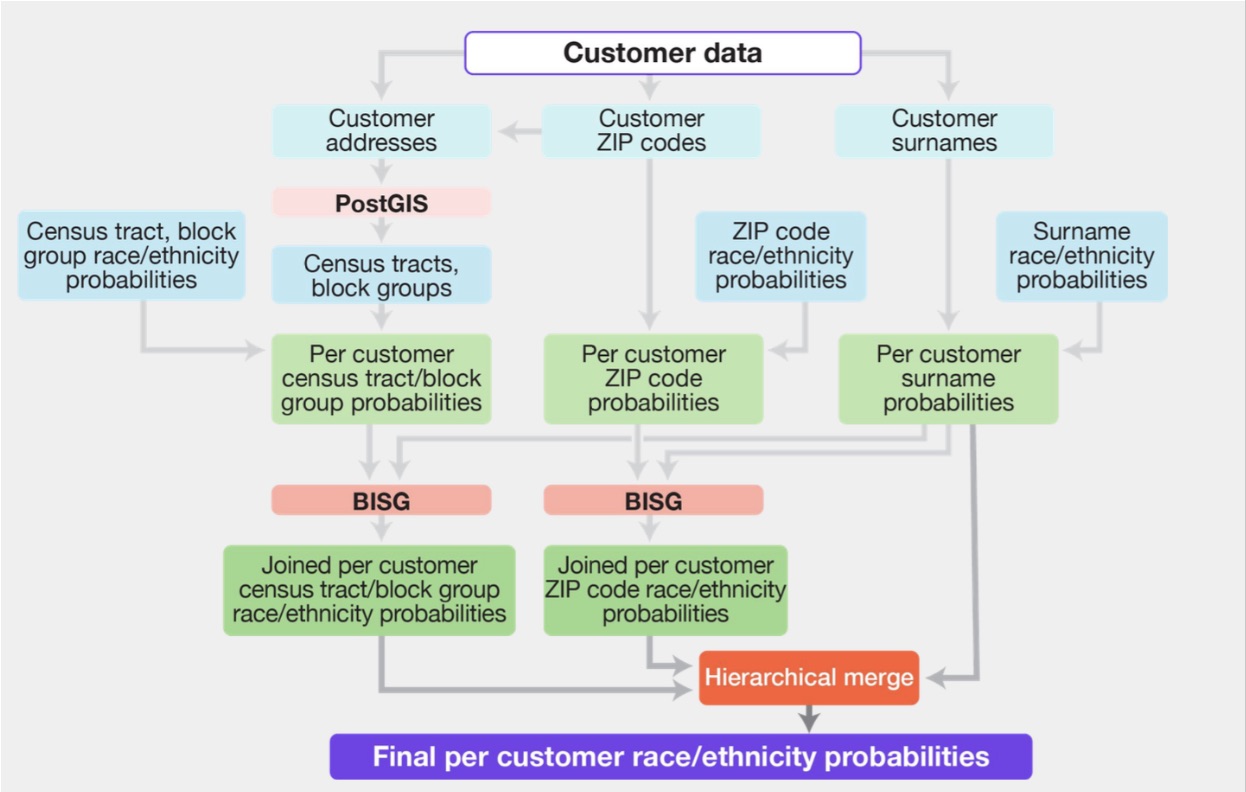

We use demographic imputation methodologies, such as BISG (Bayesian Improved Surname Geocoding) and BIFSG (Bayesian Improved First and Surname Geocoding), to estimate the race and ethnicity of individuals based on their names and geographic locations. Our demographic imputation solutions support financial institutions in adhering to the requirements of the Fair Housing Act, Equal Credit Opportunity Act, and other regulations. The BISG and BIFSG methodologies have limitations, but both are acknowledged and utilized by federal financial regulators, including the Consumer Financial Protection Bureau (CFPB).
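As a sketch of the Bayes’ rule step behind BISG: the surname-based probability of each race/ethnicity is multiplied by the probability of living in the applicant’s geography given that race/ethnicity, then normalized. BIFSG adds a first-name term in the same way. This assumes the name and geography signals are conditionally independent given race. The category labels and every probability below are hypothetical; real implementations draw these inputs from Census surname tables and block-group or tract population counts.

```python
def bisg(p_race_given_surname, p_geo_given_race, p_first_given_race=None):
    """Posterior P(race | surname, [first name,] geography) via Bayes' rule.

    Assumes name and geography are conditionally independent given race.
    Passing p_first_given_race adds the first-name term used by BIFSG.
    """
    unnormalized = {}
    for race, prior in p_race_given_surname.items():
        weight = prior * p_geo_given_race[race]
        if p_first_given_race is not None:
            weight *= p_first_given_race[race]
        unnormalized[race] = weight
    total = sum(unnormalized.values())
    return {race: v / total for race, v in unnormalized.items()}

# Hypothetical inputs for one applicant.
p_race_given_surname = {"white": 0.60, "black": 0.30, "hispanic": 0.05, "api": 0.05}
# Share of each group's population living in the applicant's geography.
p_geo_given_race = {"white": 0.0001, "black": 0.0004, "hispanic": 0.0002, "api": 0.0001}

posterior = bisg(p_race_given_surname, p_geo_given_race)
print({race: round(p, 3) for race, p in posterior.items()})
# {'white': 0.308, 'black': 0.615, 'hispanic': 0.051, 'api': 0.026}
```

In this made-up case the surname alone points toward one group, but the geography shifts most of the probability to another, which is why the estimate is a probability distribution rather than a single label.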

There are many different definitions of fairness and many of them conflict with one another.

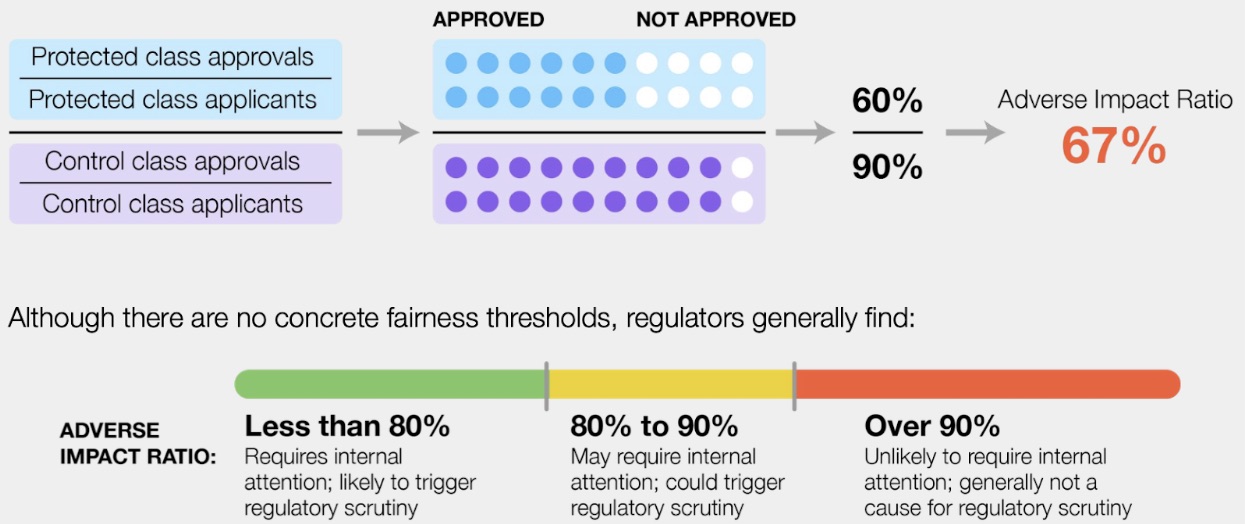

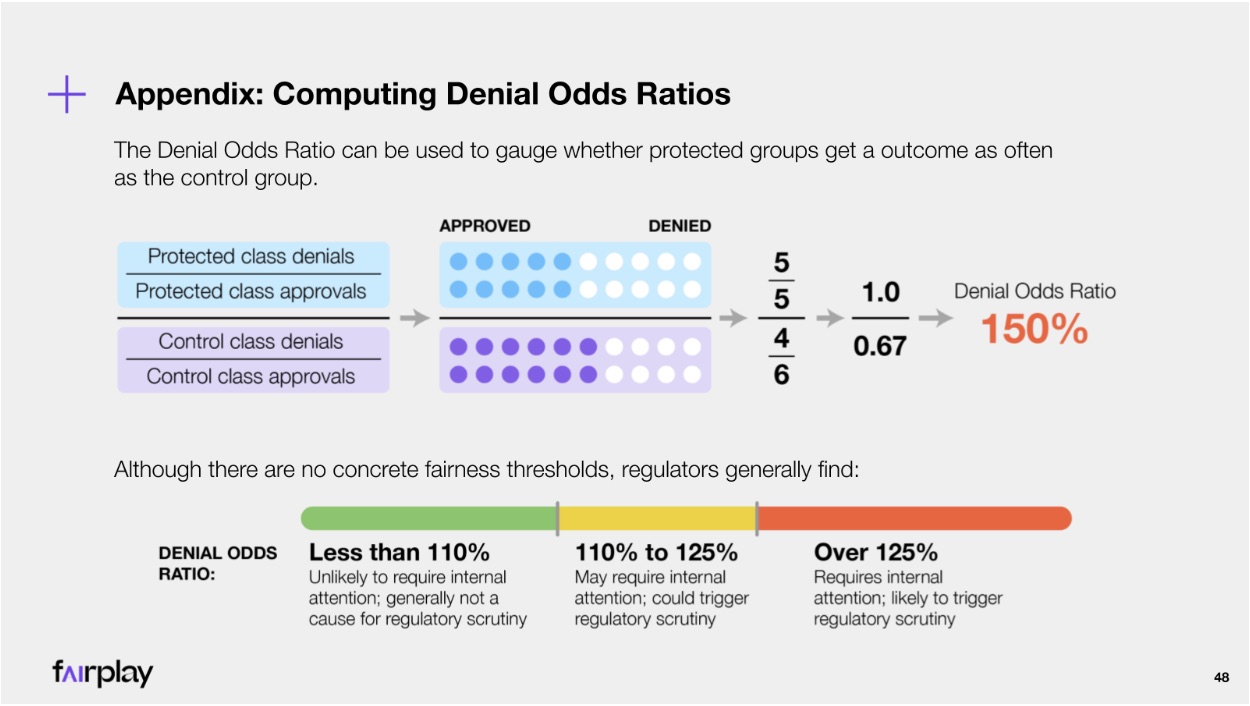

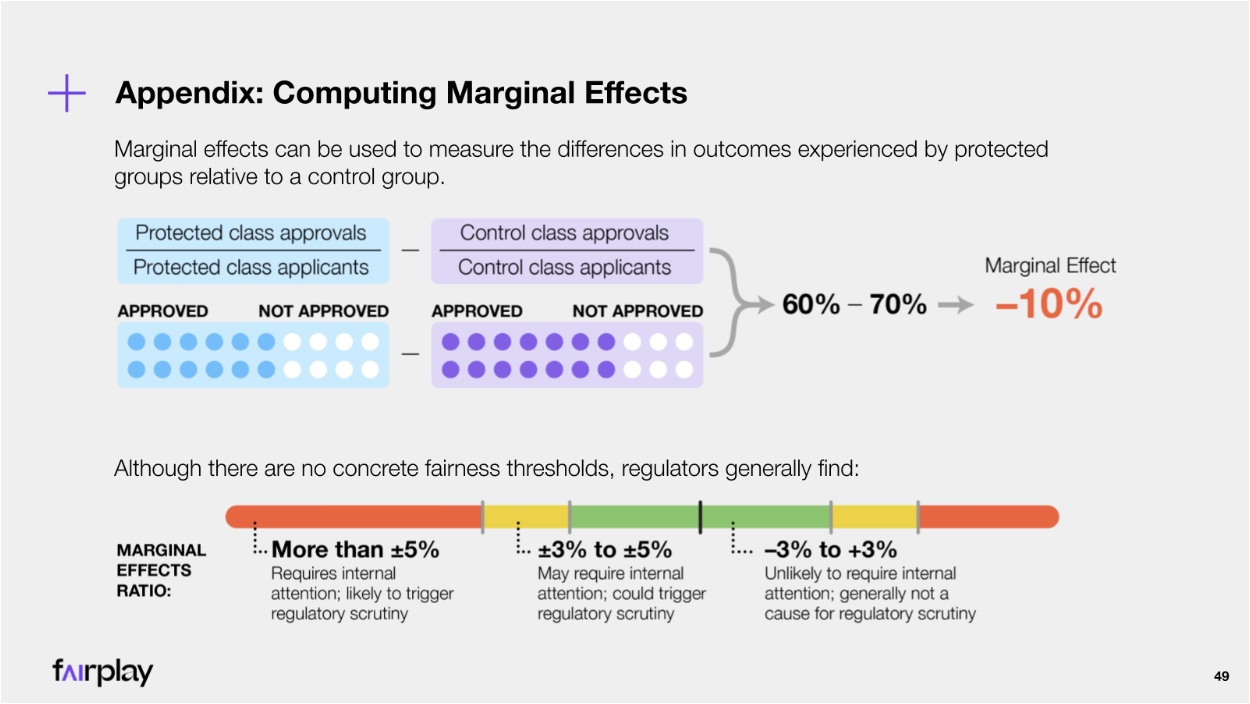
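To make the conflict concrete, the sketch below uses hypothetical outcomes for two small groups with different repayment base rates and compares two common definitions from the fairness literature: demographic parity (equal approval rates) and equalized odds (equal true-positive and false-positive rates). Decision A approves exactly the applicants who repay; decision B also approves one protected applicant who does not repay, so that approval rates match. The data and function names are illustrative assumptions.

```python
def approval_rate(approved):
    return sum(approved) / len(approved)

def rate_among(y, approved, label):
    """Share approved among applicants whose true outcome equals `label`."""
    picked = [a for yi, a in zip(y, approved) if yi == label]
    return sum(picked) / len(picked)

def report(y_ctrl, a_ctrl, y_prot, a_prot):
    """Control-minus-protected gaps for each fairness criterion."""
    return {
        # Demographic parity: approval rates should match.
        "parity_gap": approval_rate(a_ctrl) - approval_rate(a_prot),
        # Equalized odds: true-positive and false-positive rates should match.
        "tpr_gap": rate_among(y_ctrl, a_ctrl, 1) - rate_among(y_prot, a_prot, 1),
        "fpr_gap": rate_among(y_ctrl, a_ctrl, 0) - rate_among(y_prot, a_prot, 0),
    }

# Hypothetical outcomes (1 = repays) with different base rates per group.
y_ctrl = [1, 1, 1, 0]
y_prot = [1, 1, 0, 0]

# Decision A: approve exactly the applicants who repay.
report_a = report(y_ctrl, [1, 1, 1, 0], y_prot, [1, 1, 0, 0])
# Decision B: also approve one protected non-repayer so approval rates match.
report_b = report(y_ctrl, [1, 1, 1, 0], y_prot, [1, 1, 1, 0])

print(report_a)  # {'parity_gap': 0.25, 'tpr_gap': 0.0, 'fpr_gap': 0.0}
print(report_b)  # {'parity_gap': 0.0, 'tpr_gap': 0.0, 'fpr_gap': -0.5}
```

Decision A satisfies equalized odds but not demographic parity; decision B satisfies demographic parity but gives protected non-repayers a higher false-positive rate. With these unequal base rates, neither decision satisfies both definitions.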

While defining “fair” can be complex and multifaceted, in the context of consumer finance, there are several established metrics of fairness, including the adverse impact ratio (AIR), denial odds ratio, and marginal effects, which are often used by courts, regulators, and industry practitioners to assess fairness and potential disparate impact.
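As a sketch, the three metrics named above can be computed from approval counts. The function name and counts are hypothetical. An AIR below 1 means protected applicants are approved at a lower rate than control applicants, and a denial odds ratio above 1 means they face higher odds of denial. The marginal effect is taken here as the simple difference in approval rates; analyses that need to account for other credit factors may instead estimate it with a regression.

```python
def fairness_metrics(prot_approved, prot_total, ctrl_approved, ctrl_total):
    """Common disparity metrics comparing a protected group with a control group.

    Assumes both groups have approval rates strictly between 0 and 1.
    """
    prot_rate = prot_approved / prot_total
    ctrl_rate = ctrl_approved / ctrl_total
    # Adverse impact ratio: protected approval rate relative to control approval rate.
    air = prot_rate / ctrl_rate
    # Denial odds ratio: odds of denial for protected vs. control applicants.
    prot_denial_odds = (1 - prot_rate) / prot_rate
    ctrl_denial_odds = (1 - ctrl_rate) / ctrl_rate
    denial_odds_ratio = prot_denial_odds / ctrl_denial_odds
    # Marginal effect (simple version): approval-rate gap, control minus protected.
    marginal_effect = ctrl_rate - prot_rate
    return {"air": air, "denial_odds_ratio": denial_odds_ratio,
            "marginal_effect": marginal_effect}

# Hypothetical counts: 300 of 500 protected and 800 of 1,000 control applicants approved.
metrics = fairness_metrics(300, 500, 800, 1000)
print({name: round(value, 3) for name, value in metrics.items()})
# {'air': 0.75, 'denial_odds_ratio': 2.667, 'marginal_effect': 0.2}
```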